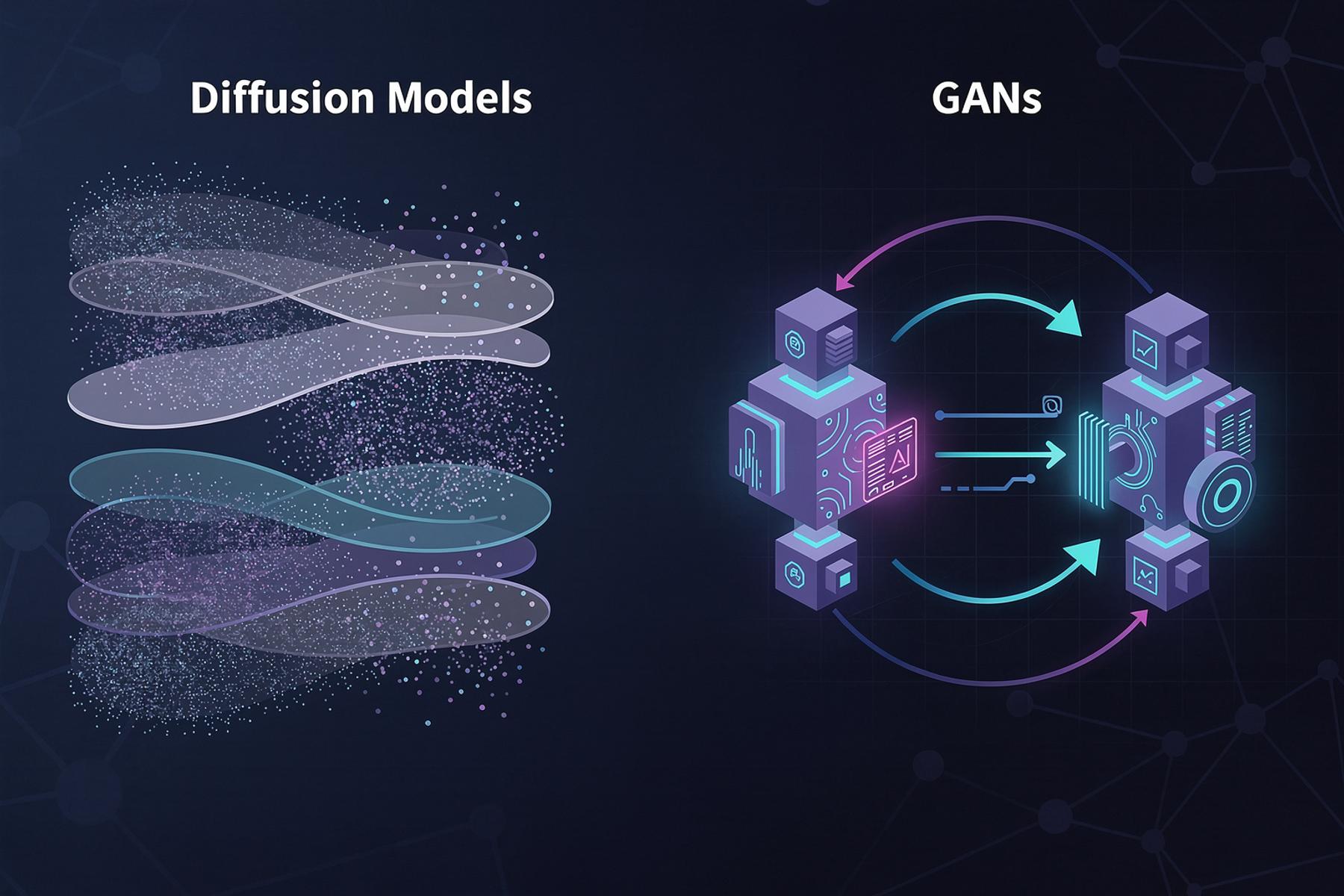

Diffusion Models vs GANs: Key Differences Explained

Explore the fundamental differences between diffusion models and GANs, two leading AI image generation technologies, and how to detect their outputs.

Introduction to AI Image Generation Technologies

Artificial intelligence has revolutionized digital imagery, enabling the creation of highly realistic visuals from simple text prompts. Two dominant technologies powering this transformation are Generative Adversarial Networks (GANs) and Diffusion Models. While both can produce stunning AI-generated content, they operate on fundamentally different principles. Understanding these differences is crucial for professionals in content verification, journalism, and digital media who rely on tools like Detect AI Image to ensure image authenticity.

This article explores the key distinctions between diffusion models and GANs, their strengths and limitations, and practical implications for detecting AI-generated images.

How GANs Work: The Adversarial Approach

Generative Adversarial Networks, introduced by Ian Goodfellow and colleagues in 2014, consist of two neural networks trained in competition:

- Generator: Creates images from random noise, aiming to produce realistic outputs

- Discriminator: Evaluates images, distinguishing between real and generated examples
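Goodfellow and colleagues formalized this competition as a two-player minimax game. The notation is as follows:

- D(x) is the discriminator's estimated probability that x is a real image.
- G(z) is the image the generator produces from a noise vector z.
- p_data is the distribution of real images.
- p_z is the noise prior.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to raise V by scoring real images near 1 and generated ones near 0. The generator tries to lower V by pushing D(G(z)) toward 1.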

Key Characteristics of GANs

- Adversarial Training: The generator improves by fooling the discriminator, while the discriminator becomes better at detection

- Fast Generation: Once trained, GANs can produce images in a single forward pass

- Mode Collapse: The generator may produce only a narrow range of outputs, settling on a few "safe" examples that reliably fool the discriminator

- Training Instability: Requires careful balancing between generator and discriminator
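The adversarial training described above comes down to two opposing loss functions computed from the discriminator's outputs. The minimal sketch below works on plain lists of probabilities rather than a real network, and the function names are illustrative.

It also shows the "non-saturating" generator loss suggested in the original GAN paper. Early in training the discriminator easily rejects fakes. In that regime the original minimax loss changes very little, while the non-saturating version still gives a strong learning signal. This is one small piece of why balancing the two networks takes care.

```python
import math

EPS = 1e-12  # keeps log() finite if a probability is exactly 0 or 1


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator (real images labelled 1, fakes 0).

    d_real: outputs D(x) on a batch of real images, as probabilities.
    d_fake: outputs D(G(z)) on a batch of generated images.
    Minimising this loss is the discriminator's half of the adversarial game.
    """
    real_term = -sum(math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss_minimax(d_fake):
    """Generator term of the original minimax game: minimise mean log(1 - D(G(z)))."""
    return sum(math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)


def generator_loss_non_saturating(d_fake):
    """Non-saturating variant: minimise mean -log D(G(z)) instead."""
    return -sum(math.log(max(p, EPS)) for p in d_fake) / len(d_fake)


def loss_slope(loss_fn, p, h=1e-6):
    """Finite-difference derivative of a generator loss with respect to D(G(z))."""
    return (loss_fn([p + h]) - loss_fn([p])) / h


if __name__ == "__main__":
    print(discriminator_loss([0.9, 0.8], [0.2, 0.1]))
    # When the discriminator confidently rejects a fake (D(G(z)) = 0.01):
    print(loss_slope(generator_loss_minimax, 0.01))         # shallow: about -1
    print(loss_slope(generator_loss_non_saturating, 0.01))  # steep: about -100
```

In a real GAN these losses are computed on network outputs and minimised with gradient descent. The discriminator and generator are usually updated in alternating steps.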

Practical Examples of GANs

- StyleGAN: Used for generating highly realistic human faces (e.g., ThisPersonDoesNotExist.com)

- CycleGAN: Transforms images between domains (e.g., turning horses into zebras)

- Pix2Pix: Converts sketches into photorealistic images

GAN-generated images often exhibit subtle artifacts like:

- Unnatural textures in backgrounds

- Asymmetrical facial features

- Blurry or distorted fine details

Diffusion Models: The Gradual Denoising Process

Diffusion models were first proposed in 2015 and popularized by a wave of research in 2020-2021, including work from UC Berkeley, Google, and OpenAI. They take a fundamentally different approach:

- Forward Process: Gradually adds Gaussian noise to a training image over many steps until only random noise remains

- Reverse Process: A neural network learns to undo one noising step at a time (typically by predicting the added noise), so new images can be generated starting from pure noise
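In DDPM-style models the forward process has a convenient closed form. Let β_t be the per-step noise variance and ᾱ_t the running product of (1 - β_t). A noisy version of a training image at any step t can then be sampled directly as x_t = √ᾱ_t · x_0 + √(1 - ᾱ_t) · ε, where ε is standard Gaussian noise.

The minimal sketch below makes a few assumptions:

- It uses the linear schedule from the original DDPM paper (β from 1e-4 to 0.02 over 1,000 steps).
- It treats an image as a flat list of pixel values scaled to [-1, 1].
- Its function names are illustrative.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, increasing linearly from beta_start to beta_end."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): how much of the original signal survives step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng=random):
    """Jump straight to step t of the forward process.

    x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, with eps ~ N(0, I).
    x0 is a flat list of pixel values; t is a 0-based step index.
    Returns x_t and the noise, since the noise is what the network is trained to predict.
    """
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * p + math.sqrt(1.0 - a) * e for p, e in zip(x0, noise)]
    return xt, noise


if __name__ == "__main__":
    alpha_bars = cumulative_alphas(linear_beta_schedule())
    for t in (0, 250, 500, 999):
        # Weight remaining on the original image at step t.
        print(t, round(math.sqrt(alpha_bars[t]), 4))
```

Because ᾱ_t shrinks toward zero, by the last step almost nothing of the original image remains. During training, a random t is drawn for each image, and the network learns to recover `noise` from `xt`.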

Key Characteristics of Diffusion Models

- Iterative Generation: Produces images through many small denoising steps (typically 25 to 1,000, depending on the sampler)

- Stable Training: More predictable than GANs, with less risk of mode collapse

- High-Quality Outputs: Often produces more detailed and diverse images

- Computationally Intensive: Requires more resources during both training and generation
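The iterative loop behind "many small denoising steps" can be sketched with the ancestral sampler from the DDPM paper and its linear 1,000-step schedule. Training a network is out of scope here. Instead, a hypothetical `ideal_noise_predictor` stands in for it: for one-dimensional Gaussian "images", the best possible noise estimate has a closed form.

Every step calls the predictor once. That is why generation costs roughly one network evaluation per step, compared with a single forward pass for a GAN. Faster samplers (for example DDIM) reach the low end of the step range by skipping steps, which this sketch does not attempt.

```python
import math
import random


def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule and its cumulative products alpha_bar_t."""
    betas = [beta_start + i * (beta_end - beta_start) / (num_steps - 1) for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars


def ideal_noise_predictor(data_mean, data_std):
    """Hypothetical stand-in for a trained denoising network.

    For 1-D data x_0 ~ N(data_mean, data_std^2), the optimal noise estimate
    E[eps | x_t] is available in closed form, so no training is required.
    """
    def predict(xt, alpha_bar):
        a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
        return b * (xt - a * data_mean) / (alpha_bar * data_std ** 2 + 1.0 - alpha_bar)
    return predict


def ddpm_sample(predict_noise, betas, alpha_bars, rng=random):
    """Ancestral DDPM sampling: start from pure noise, take one denoising step per t."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(len(betas))):
        beta, alpha_bar = betas[t], alpha_bars[t]
        eps_hat = predict_noise(x, alpha_bar)  # one "network" call per step
        # Mean of x_{t-1} given x_t, written in terms of the predicted noise.
        mean = (x - beta / math.sqrt(1.0 - alpha_bar) * eps_hat) / math.sqrt(1.0 - beta)
        if t > 0:
            # Posterior variance beta_t * (1 - alpha_bar_{t-1}) / (1 - alpha_bar_t).
            var = beta * (1.0 - alpha_bars[t - 1]) / (1.0 - alpha_bar)
            x = mean + math.sqrt(var) * rng.gauss(0.0, 1.0)
        else:
            x = mean  # the final step adds no fresh noise
    return x


if __name__ == "__main__":
    betas, alpha_bars = make_schedule()
    predictor = ideal_noise_predictor(data_mean=2.0, data_std=0.5)
    samples = [ddpm_sample(predictor, betas, alpha_bars) for _ in range(200)]
    print(sum(samples) / len(samples))  # should land near the data mean of 2.0
```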

Practical Examples of Diffusion Models

- Stable Diffusion: Open-source model capable of generating diverse image types

- DALL-E 2: OpenAI’s model for creating images from text descriptions

- Imagen: Google’s text-to-image diffusion model

Diffusion model outputs may show:

- Overly smooth textures in some areas

- Inconsistent details when zoomed in

- Artifacts in complex compositions

Key Differences Between Diffusion Models and GANs

| Feature | Diffusion Models | GANs |
|---|---|---|
| Training Approach | Gradual denoising process | Adversarial competition |
| Generation Speed | Slower (many iterative steps) | Faster (single forward pass) |
| Training Stability | More stable, predictable | Less stable, requires careful balancing |
| Output Quality | Generally higher quality, more diverse | Can be high quality but prone to artifacts |
| Resource Usage | Computationally intensive | Less resource-intensive |
| Mode Coverage | Better at covering diverse outputs | Prone to mode collapse |
| Flexibility | Easier to adapt to new tasks | Requires architecture adjustments |
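The "Mode Coverage" row is often checked on toy data. A common setup is a mixture of well-separated clusters, such as a ring of 2-D Gaussians, where you count how many clusters the generated samples actually land near. A collapsed generator piles its samples onto one or two clusters.

The sketch below assumes you already have 2-D samples from some generator. The ring layout and the distance threshold are illustrative choices, not a standard benchmark definition.

```python
import math


def ring_of_modes(num_modes=8, radius=2.0):
    """Centres of a toy mixture: num_modes points spaced evenly on a circle."""
    return [
        (radius * math.cos(2 * math.pi * k / num_modes),
         radius * math.sin(2 * math.pi * k / num_modes))
        for k in range(num_modes)
    ]


def modes_covered(samples, centres, max_dist=0.5):
    """Count how many centres have at least one sample within max_dist of them."""
    covered = set()
    for sx, sy in samples:
        for idx, (cx, cy) in enumerate(centres):
            if math.hypot(sx - cx, sy - cy) <= max_dist:
                covered.add(idx)
    return len(covered)


if __name__ == "__main__":
    centres = ring_of_modes()
    collapsed = [(2.0 + 0.01 * i, 0.0) for i in range(10)]  # everything near one cluster
    print(modes_covered(collapsed, centres), "of", len(centres))
```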

Detecting AI-Generated Images from Different Models

As AI image generation advances, distinguishing between real and synthetic content becomes more challenging. However, each family of models tends to leave characteristic fingerprints that tools like Detect AI Image are designed to pick up:

GAN Detection Characteristics

- Texture Inconsistencies: Unnatural patterns in backgrounds or surfaces

- Facial Asymmetry: Subtle mismatches in eyes, ears, or facial features

- Artifact Patterns: Repeating patterns or blurry areas

- Color Distribution: Unnatural color gradients or saturation
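Repeating artifacts, such as the checkerboard patterns that upsampling layers can leave behind, are one reason researchers have examined GAN images in the frequency domain. There, periodic patterns show up as concentrated spectral energy. The sketch below computes one crude feature: the share of an image's spectral energy at high spatial frequencies.

This is an illustrative assumption, not a description of how Detect AI Image works. The cutoff is arbitrary, and a synthetic checkerboard stands in for an upsampling artifact. The naive DFT is only practical for tiny patches; real images would need an FFT library.

```python
import cmath
import math


def power_spectrum(img):
    """Naive 2-D DFT power spectrum of a small grayscale image (a list of rows).

    O(N^4) work: fine for a 16x16 patch, far too slow for full-size photos.
    """
    rows, cols = len(img), len(img[0])
    power = {}
    for u in range(rows):
        for v in range(cols):
            acc = 0j
            for y in range(rows):
                for x in range(cols):
                    acc += img[y][x] * cmath.exp(-2j * math.pi * (u * y / rows + v * x / cols))
            power[(u, v)] = abs(acc) ** 2
    return power


def signed_freq(k, n):
    """Map DFT index k to a signed frequency in cycles per pixel, in (-0.5, 0.5]."""
    return (k if k <= n // 2 else k - n) / n


def high_freq_ratio(img, cutoff=0.25):
    """Share of non-DC spectral energy whose radial frequency exceeds cutoff."""
    rows, cols = len(img), len(img[0])
    total = high = 0.0
    for (u, v), p in power_spectrum(img).items():
        if u == 0 and v == 0:
            continue  # skip the DC term, which only reflects mean brightness
        total += p
        if math.hypot(signed_freq(u, rows), signed_freq(v, cols)) > cutoff:
            high += p
    return high / total if total > 0 else 0.0


if __name__ == "__main__":
    n = 16
    checkerboard = [[(-1) ** (x + y) for x in range(n)] for y in range(n)]
    smooth_ramp = [[x / (n - 1) for x in range(n)] for y in range(n)]
    print(high_freq_ratio(checkerboard), high_freq_ratio(smooth_ramp))
```

A strongly periodic artifact pushes the ratio toward 1, while smooth content keeps it low. Natural textures fall somewhere in between, which is why a single number like this is never decisive on its own.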

Diffusion Model Detection Characteristics

- Over-Smoothing: Some areas appear too smooth or plastic-like

- Detail Inconsistencies: Fine details may not align perfectly

- Composition Issues: Complex scenes may have logical inconsistencies

- Lighting Artifacts: Unnatural shadows or reflections
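Over-smoothing can be approximated numerically with the variance of the Laplacian, a classic sharpness measure from image processing. Smooth or "plastic-looking" regions produce a weak, uniform Laplacian response. The sketch below reports the fraction of image tiles with almost no fine detail.

The tile size and threshold are arbitrary assumptions. Many genuine photos (clear skies, studio backdrops, shallow depth of field) also contain smooth areas. Treat the number as a prompt for closer inspection rather than a verdict.

```python
def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over the interior pixels of a grayscale image.

    img is a list of rows with at least 3 rows and 3 columns.
    Low values mean little fine detail; high values mean strong texture or noise.
    """
    rows, cols = len(img), len(img[0])
    responses = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, rows - 1)
        for x in range(1, cols - 1)
    ]
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def smooth_tile_fraction(img, tile=8, threshold=1e-4):
    """Fraction of non-overlapping tile x tile blocks whose Laplacian variance is below threshold."""
    rows, cols = len(img), len(img[0])
    flags = []
    for top in range(0, rows - tile + 1, tile):
        for left in range(0, cols - tile + 1, tile):
            block = [row[left:left + tile] for row in img[top:top + tile]]
            flags.append(laplacian_variance(block) < threshold)
    return sum(flags) / len(flags)


if __name__ == "__main__":
    # Left half: a smooth gradient. Right half: a busy checkerboard texture.
    img = [[x / 15 if x < 8 else float((x + y) % 2) for x in range(16)] for y in range(16)]
    print(smooth_tile_fraction(img))
```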

Practical Detection Tips

- Examine Fine Details: Zoom in on textures, hair, or fabric patterns

- Check Symmetry: Look for asymmetrical features in faces or objects

- Analyze Backgrounds: AI-generated backgrounds often contain artifacts

- Verify Context: Does the image make logical sense in its composition?

- Use Detection Tools: Combine manual inspection with tools like Detect AI Image for comprehensive verification
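The symmetry check can be given a rough numeric form by comparing a centred, front-facing crop with its mirror image. Real faces are never perfectly symmetric, so the score only means something relative to comparable genuine photos. The function name and the assumption of grayscale pixels scaled to [0, 1] belong to this sketch, not to any particular tool.

```python
def asymmetry_score(img):
    """Mean absolute difference between each pixel and its left-right mirror partner.

    img is a grayscale image as a list of rows with values in [0, 1].
    0.0 means perfectly mirror-symmetric; larger values mean more asymmetry.
    The centre column of an odd-width image has no partner and is skipped.
    """
    total, count = 0.0, 0
    for row in img:
        width = len(row)
        for i in range(width // 2):
            total += abs(row[i] - row[width - 1 - i])
            count += 1
    return total / count if count else 0.0


if __name__ == "__main__":
    print(asymmetry_score([[0.2, 0.8, 0.8, 0.2], [0.1, 0.5, 0.6, 0.1]]))
```

In practice you would first align the face so that the midline sits on the centre column. Otherwise head pose alone dominates the score.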

Practical Applications and Industry Impact

Journalism and Media Verification

Journalists increasingly rely on AI detection tools to:

- Verify user-submitted content on social media

- Authenticate images in breaking news situations

- Identify potential deepfakes in political or sensitive contexts

“In an era of misinformation, tools that can distinguish between real and AI-generated images are essential for maintaining journalistic integrity,” notes Sarah Chen, Digital Editor at Global News Network.

Academic Integrity

Educational institutions use AI detection to:

- Verify student artwork submissions

- Detect AI-generated images in research papers

- Maintain academic honesty in digital media courses

Content Creation and Copyright

Content creators and marketers benefit from:

- Verifying the origin of stock images

- Ensuring proper attribution for AI-generated content

- Protecting original work from unauthorized AI training

The Future of AI Image Generation and Detection

As both generation and detection technologies evolve, we can expect:

- More Sophisticated Models: Future AI generators will produce even more realistic images

- Improved Detection: Tools like Detect AI Image will enhance their algorithms to keep pace

- Regulatory Developments: Potential requirements for AI-generated content labeling

- Hybrid Approaches: Combining the strengths of diffusion models and GANs

Researchers are also exploring:

- Watermarking Techniques: Embedding invisible markers in AI-generated images

- Metadata Standards: Developing protocols for AI content identification

- Collaborative Detection: Community-driven approaches to identifying AI artifacts

Best Practices for Image Verification

- Combine Multiple Methods: Use both manual inspection and automated tools

- Check Image Metadata: Look for AI-related metadata or inconsistencies

- Reverse Image Search: Verify if the image appears elsewhere online

- Consider the Source: Evaluate the credibility of the image provider

- Use Specialized Tools: Leverage platforms like Detect AI Image for comprehensive analysis

- Stay Informed: Keep up with advancements in AI image generation

- Contextual Analysis: Consider whether the image makes logical sense
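For the metadata check, PNG files are simple enough to inspect with the standard library. A PNG is an 8-byte signature followed by chunks. Each chunk is laid out as a 4-byte big-endian length, a 4-byte type, the data, and a 4-byte CRC. Uncompressed `tEXt` chunks hold "keyword, NUL byte, text" pairs, and some tools store generation details there.

The minimal sketch below has deliberate limits:

- It reads only `tEXt` chunks, ignoring `zTXt`, `iTXt`, EXIF, and content-credential manifests.
- It skips CRC verification.
- Its watch-list of keywords is a hypothetical example.

Metadata is trivially stripped or forged, so its absence proves nothing.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Hypothetical watch-list; real generators and editors use their own key names.
WATCH_KEYS = {"parameters", "prompt", "Software"}


def png_text_chunks(data):
    """Return keyword -> text pairs from a PNG's uncompressed tEXt chunks."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos, found = len(PNG_SIGNATURE), {}
    while pos + 8 <= len(data):
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            keyword, _, text = body.partition(b"\x00")
            found[keyword.decode("latin-1")] = text.decode("latin-1")
        elif chunk_type == b"IEND":
            break
        pos += 12 + length  # 4-byte length + 4-byte type + data + 4-byte CRC
    return found


def flag_metadata(data, watch_keys=WATCH_KEYS):
    """Return only the text entries whose keyword is on the watch-list."""
    return {k: v for k, v in png_text_chunks(data).items() if k in watch_keys}
```

Usage, with a hypothetical file name: `with open("photo.png", "rb") as f: print(flag_metadata(f.read()))`.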

Conclusion

While both diffusion models and GANs produce impressive AI-generated content, their underlying mechanisms lead to distinct characteristics in their outputs. Diffusion models generally offer higher quality and more diverse images but require more computational resources, while GANs provide faster generation at the potential cost of some output quality and variety.

For professionals in journalism, education, and content creation, understanding these differences is crucial for effective image verification. Tools like Detect AI Image play a vital role in maintaining digital authenticity by identifying the subtle artifacts left by different AI generation techniques.

As AI image generation continues to advance, staying informed about these technologies and their detection methods will be essential for anyone working with digital imagery. Whether you’re verifying news photos, assessing student submissions, or creating original content, the ability to distinguish between real and AI-generated images remains a critical skill in the digital age.